AirBnB

source : https://github.com/msa-ez/airbnb_project

Accommodation reservation(AirBnB)

This example is configured to cover all stages of analysis/design/implementation/operation including MSA/DDD/Event Storming/EDA. It includes example answers to pass the checkpoints required for the development of cloud-native applications.

Service scenario

This example covers an AirBnB-style accommodation service.

Functional requirements

1. The host registers, modifies, and deletes accommodation to rent out.

2. The customer selects and makes a reservation.

3. Payment is made at the same time as the reservation.

4. When a reservation is made, the reservation details (Message) are delivered.

5. The customer may cancel the reservation.

6. If the reservation is canceled, a cancellation message (Message) is delivered.

7. You can leave a review on the property.

8. You can check the overall accommodation information and reservation status on one screen. (viewpage)

Non-functional requirements

1. Transactions

   - A reservation must not be established without payment. (Sync call)

2. Failure isolation

   - Reservations must be accepted 24 hours a day, 365 days a year, even when the accommodation registration and message transmission functions are unavailable. (Async, event-driven, eventual consistency)

   - When the reservation system is overloaded, stop accepting requests for a while and prompt users to try again later. (Circuit breaker, fallback)

3. Performance

   - All room information and reservation status must be viewable at once. (CQRS)

   - A message must be sent whenever the reservation status changes. (Event driven)

CheckPoint

Analysis/design

Event Storming:

- Do you properly understand the meaning of each sticker color object and properly reflect it in the design in connection with the hexagonal architecture?

- Is each domain event defined at a meaningful level?

- Aggregate: Are Commands and Events properly grouped into Aggregates, each the unit of an ACID transaction?

- Are functional and non-functional requirements reflected without omission?

Separation of subdomains, bounded contexts

- Is each sub-domain or bounded context properly separated according to the teams' KPIs, interests, and deployment cycles, and is the rationale for the separation criteria sufficiently explained?

- Separation of at least 3 services

- Polyglot design: Have you designed each microservice by adopting various technology stack and storage structures according to the implementation goals and functional characteristics of each microservice?

- In the service scenario, for the use case where the ACID transaction is critical, is the service not excessively and densely separated?

Context Mapping / Event Driven Architecture

- Can you differentiate between task importance and hierarchy between domains? (Core, Supporting, General Domain)

- Can the request-response method and event-driven method be designed separately?

- Fault Isolation: Is it designed so that the existing service is not affected even if the supporting service is removed?

- Can it be designed (open architecture) so that the database of existing services is not affected when new services are added?

- Did you design the Correlation-key connection to connect the event and the policy properly?

Hexagonal Architecture

- Did you draw the hexagonal architecture diagram according to the design result correctly?

Implementation

- [DDD] Was the implementation developed so that it maps to each sticker color and to the hexagonal architecture from the analysis stage?

- Have you developed a data access adapter through JPA by applying Entity Pattern and Repository Pattern?

- [Hexagonal Architecture] In addition to the REST inbound adapter, is it possible to adapt the existing implementation to a new protocol without damaging the domain model by adding an inbound adapter such as gRPC?

- Is the source code described using the ubiquitous language (terms used in the workplace) in the analysis stage?

Implementation of service-oriented architecture of Request-Response method

- How did you find and call the target service in the Request-Response call between microservices? (Service Discovery, REST, FeignClient)

- Is it possible to isolate failures through circuit breakers?

Implementing an event-driven architecture

- Are more than one service linked with PubSub using Kafka?

- Correlation-key: Is the correlation-key link properly implemented so that, when an event (message) triggers a policy, it is clear which record the event relates to?

- Does the Message Consumer microservice receive and process existing events that were not received in the event of a failure?

- Scaling-out: Is it possible to receive events without duplicates when a replica of the Message Consumer microservice is added?

- CQRS: By implementing Materialized View, is it possible to configure the screen of my service and view it frequently without accessing the data source of other microservices (without Composite service or join SQL, etc.)?

Polyglot programming

- Is each microservice built on one or more distinct technology stacks?

- Did each microservice autonomously adopt its own storage structure and implement it by selecting its own storage type (RDB, NoSQL, File System, etc.)?

API Gateway

- Can the point of entry of microservices be unified through API GW?

- Is it possible to secure microservices through gateway, authentication server (OAuth), and JWT token authentication?

Operation

SLA Compliance

- Self-Healing: Can you demonstrate, through the Liveness Probe, the threshold at which a pod is restarted as the health of a service continuously deteriorates?

- Can fault isolation and performance efficiency be improved through circuit breaker and rate limit?

- Is it possible to set up an autoscaler (HPA) for scalable operation?

- Monitoring, alerting:

Nonstop Operation CI/CD

- Readiness Probe and rolling update: Is it demonstrated with siege that traffic switches to the new version only once the new version is fully able to serve?

- Contract Test: Is it possible to prevent implementation errors or API contract violations in advance through automated boundary testing?

Analysis/Design

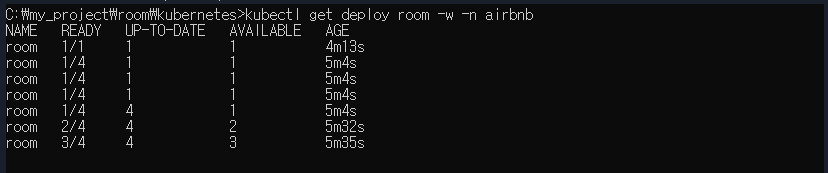

AS-IS Organization (Horizontally-Aligned)

TO-BE Organization (Vertically-Aligned)

Event Storming Results

Eventstorming results modeled with MSAEz

Event derivation

Dropping ineligible events

- Filter out invalid domain events derived during the process

- Registration>RoomSearched, Reservation>RoomSelected: Excluded because it is a UI event and not a business event

Attach actors and commands for readability

Bind into aggregates

- Room, Reservation, Payment, and Review are grouped together as a unit in which transactions must be maintained by the commands and events associated with them.

Bind to Bounded Context

Separation by domain rank

- Core Domain: reservation, room: indispensable core services. The annual uptime SLA target is 99.999%, and the deployment cycle is less than once a week for reservation and less than once a month for room.

- Supporting Domain: message, viewpage: services that support competitiveness. The annual uptime SLA target is 60% or higher. Each team decides its own deployment cycle, but since the standard sprint is one week, the baseline is at least once a week.

- General Domain: payment: using a third-party external payment service is more competitive than building one.

Attach policies (parentheses indicate the executing subject; it is fine to attach policies in the second step, since the overall linkage becomes visible early)

Policy movement and context mapping (dashed lines are Pub/Sub, solid lines are Req/Resp)

Completed first model

- Add View Model

Verify that the first completed model covers the functional/non-functional requirements

- Register/modify/delete accommodation for the host to rent (ok)

- The customer selects and makes a reservation (ok)

- Payment is made at the same time as the reservation. (ok)

- When a reservation is made, the reservation details (Message) are delivered.(?)

- The customer can cancel the reservation (ok).

- If the reservation is canceled, a cancellation message is sent.(?)

- You can leave a review on the property (ok).

- You can check the overall accommodation information and reservation status on one screen. (ok after adding the View, the green sticker)

Modify the model

- The modified model covers all requirements.

Verification of non-functional requirements

- Transaction processing for scenarios that cross microservices

- Payment at reservation time: reservations that have not been paid are never accepted, so this step is treated as an ACID transaction. When a reservation is made, the room status is checked first and payment is then processed by request-response.

- Notifying the host and processing the reservation after payment completes: the room microservice has its own deployment cycle, so the hand-off of the reservation request from reservation to room is processed with eventual consistency.

- All other inter-microservice transactions: for events such as reservation status changes and post-processing, the timing of data consistency is not critical in most cases, so eventual consistency is adopted as the default.

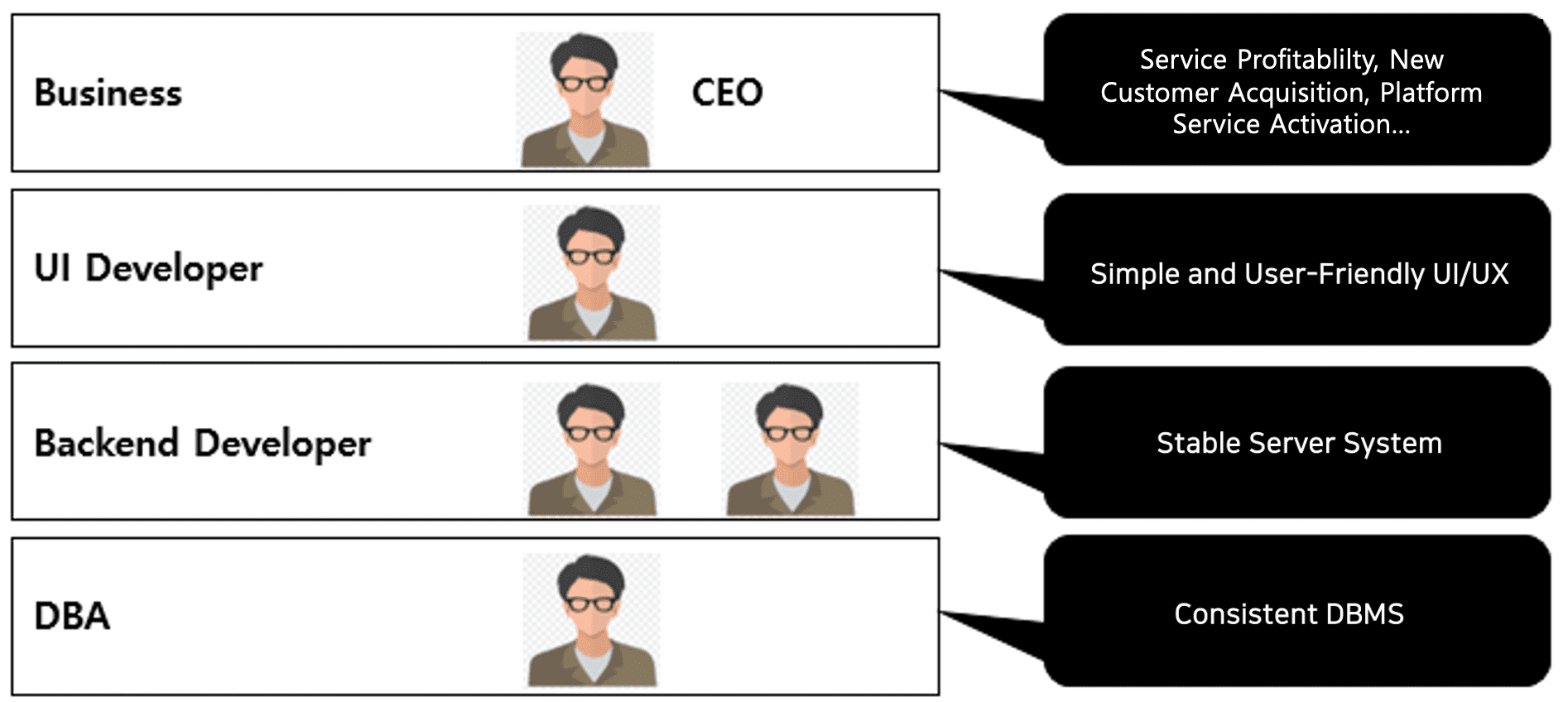

Hexagonal Architecture Diagram Derivation

- Distinguish between inbound adapters and outbound adapters, following Chris Richardson's Microservices Patterns

- Distinguish between PubSub and Req/Resp in the call relationship

- Separation of sub-domains and bounded contexts: each team's KPIs and the implementation stories it is interested in are shared as follows

Implementation

Following the hexagonal architecture derived in the analysis/design phase, the microservice for each bounded context was implemented with Spring Boot. Each service can be run locally as follows (port numbers are 8081 ~ 808n):

mvn spring-boot:run

· CQRS

CQRS was implemented so that customers can inquire about total status such as availability of rooms, reviews and reservations/payments.

- Integrating the status of the individual room, review, reservation, and payment aggregates into one view prevents performance issues in advance.

- Events published asynchronously through Kafka are received, processed, and managed in a separate table.

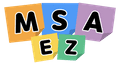

Table modeling (ROOMVIEW)

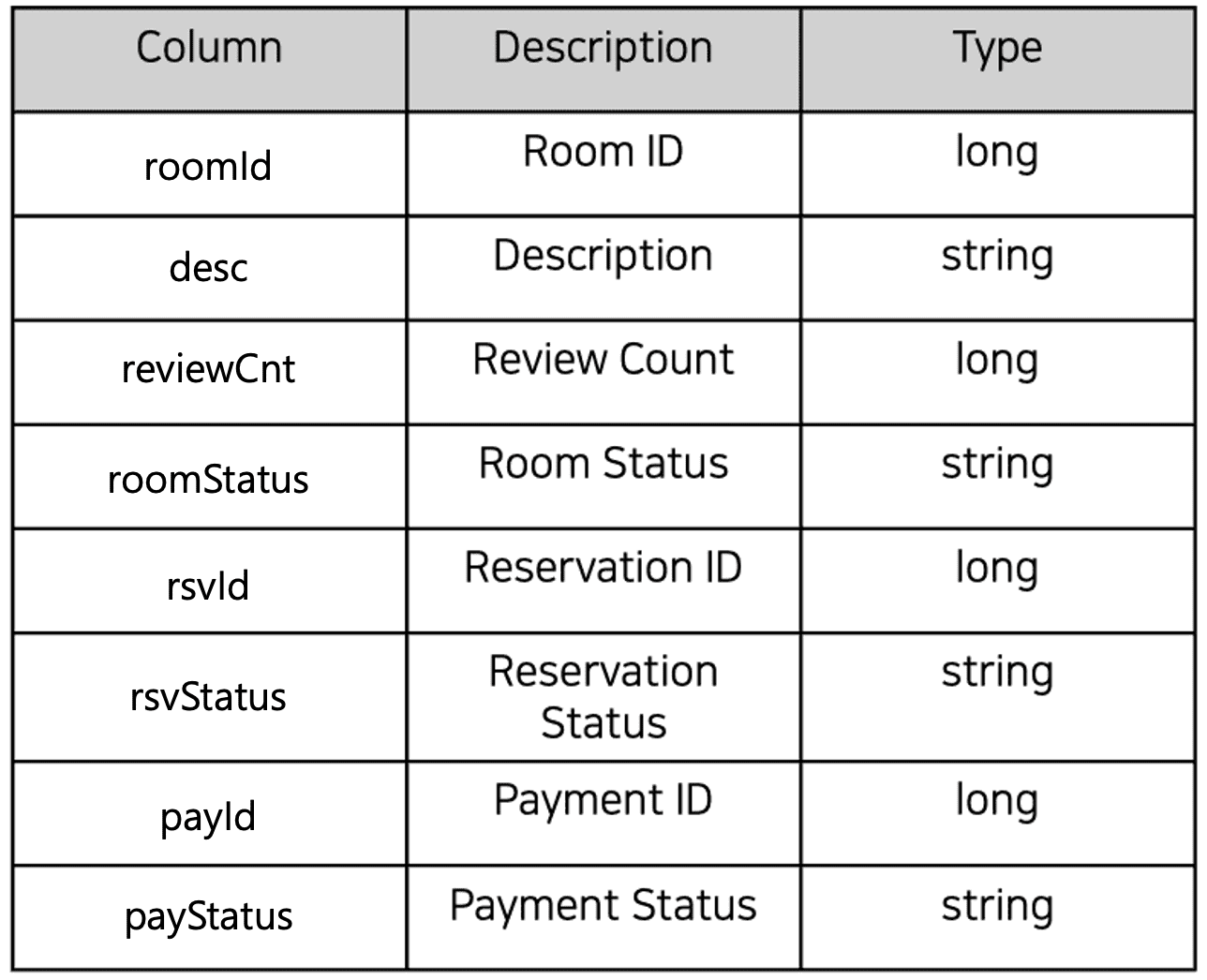

- Implemented through viewpage MSA ViewHandler (when “RoomRegistered” event occurs, it is saved in a separate Roomview table based on Pub/Sub)

- In fact, if you look up the view page, you can see information such as overall reservation status, payment status, and number of reviews for all rooms.
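The ViewHandler idea described above can be sketched in plain Java, without Spring or Kafka. This is only an illustration of the materialized-view pattern, not the project's actual code: `RoomView`, `RoomViewHandler`, the `ReviewCreated` event, and every field name are assumptions made for the example, and an in-memory map stands in for the separate Roomview table.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical event payloads; field names are illustrative, not the project's.
record RoomRegistered(long roomId, String desc) {}
record ReservationConfirmed(long rsvId, long roomId) {}
record ReviewCreated(long reviewId, long roomId) {}

// One row of the read model (the role played by the Roomview table).
class RoomView {
    final long roomId;
    final String desc;
    String rsvStatus = "none";
    int reviewCnt = 0;

    RoomView(long roomId, String desc) {
        this.roomId = roomId;
        this.desc = desc;
    }
}

// Plays the role of the viewpage ViewHandler: every event updates the view,
// so a query never has to call the room, reservation, or review services.
class RoomViewHandler {
    private final Map<Long, RoomView> table = new LinkedHashMap<>();

    void on(RoomRegistered e) {
        table.put(e.roomId(), new RoomView(e.roomId(), e.desc()));
    }

    void on(ReservationConfirmed e) {
        RoomView v = table.get(e.roomId());
        if (v != null) v.rsvStatus = "reserved";
    }

    void on(ReviewCreated e) {
        RoomView v = table.get(e.roomId());
        if (v != null) v.reviewCnt++;
    }

    RoomView find(long roomId) {
        return table.get(roomId);
    }
}

class RoomViewDemo {
    public static void main(String[] args) {
        RoomViewHandler handler = new RoomViewHandler();
        handler.on(new RoomRegistered(1L, "Beautiful House"));
        handler.on(new ReservationConfirmed(10L, 1L));
        handler.on(new ReviewCreated(100L, 1L));
        RoomView v = handler.find(1L);
        System.out.println(v.desc + " / " + v.rsvStatus + " / reviews=" + v.reviewCnt);
    }
}
```

Because the view is updated only by events, it keeps answering queries while the source services are down; the cost is that it can briefly lag behind them.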

· API Gateway

- After adding the gateway Spring Boot App, add routes for each microservice in application.yaml and set the gateway server port to 8080.

- application.yaml example

spring:
  profiles: docker
  cloud:
    gateway:
      routes:
        - id: payment
          uri: http://payment:8080
          predicates:
            - Path=/payments/**
        - id: room
          uri: http://room:8080
          predicates:
            - Path=/rooms/**, /reviews/**, /check/**
        - id: reservation
          uri: http://reservation:8080
          predicates:
            - Path=/reservations/**
        - id: message
          uri: http://message:8080
          predicates:
            - Path=/messages/**
        - id: viewpage
          uri: http://viewpage:8080
          predicates:
            - Path=/roomviews/**
      globalcors:
        corsConfigurations:
          '[/**]':
            allowedOrigins:
              - "*"
            allowedMethods:
              - "*"
            allowedHeaders:
              - "*"
            allowCredentials: true

server:
  port: 8080

- Write Deployment.yaml for Kubernetes and create the Deployment on Kubernetes

- Deployment.yaml example

apiVersion: apps/v1
kind: Deployment
metadata:
  name: gateway
  namespace: airbnb
  labels:
    app: gateway
spec:
  replicas: 1
  selector:
    matchLabels:
      app: gateway
  template:
    metadata:
      labels:
        app: gateway
    spec:
      containers:
        - name: gateway
          image: 247785678011.dkr.ecr.us-east-2.amazonaws.com/gateway:1.0
          ports:
            - containerPort: 8080

- Create the Deployment

kubectl apply -f deployment.yaml

- Confirm that the Deployment was created in Kubernetes

- Create a Service.yaml for Kubernetes and create a Service/LoadBalancer in Kubernetes to check the Gateway endpoint

- Service.yaml example

apiVersion: v1
kind: Service
metadata:
  name: gateway
  namespace: airbnb
  labels:
    app: gateway
spec:
  ports:
    - port: 8080
      targetPort: 8080
  selector:
    app: gateway
  type: LoadBalancer

- Create the Service

kubectl apply -f service.yaml

- Check the API Gateway endpoint

- Check Service and endpoint

kubectl get svc -n airbnb
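To make the effect of the route table in application.yaml concrete, here is a minimal sketch of prefix-based routing in plain Java. It is not Spring Cloud Gateway's matcher: `RouteTable` and its methods are hypothetical names, and the matching rule (exact prefix or prefix followed by `/`) is a simplifying assumption that only approximates the `Path=/x/**` predicates.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Optional;

// Hypothetical helper: maps a path prefix to an upstream base URI.
class RouteTable {
    private final Map<String, String> routes = new LinkedHashMap<>();

    RouteTable add(String pathPrefix, String uri) {
        routes.put(pathPrefix, uri);
        return this;
    }

    // Returns the upstream URL for a request path, or empty if no route matches.
    Optional<String> resolve(String path) {
        for (Map.Entry<String, String> route : routes.entrySet()) {
            String prefix = route.getKey();
            if (path.equals(prefix) || path.startsWith(prefix + "/")) {
                return Optional.of(route.getValue() + path);
            }
        }
        return Optional.empty();
    }

    // Mirrors the routes listed in the application.yaml example.
    static RouteTable airbnb() {
        return new RouteTable()
            .add("/payments", "http://payment:8080")
            .add("/rooms", "http://room:8080")
            .add("/reviews", "http://room:8080")
            .add("/check", "http://room:8080")
            .add("/reservations", "http://reservation:8080")
            .add("/messages", "http://message:8080")
            .add("/roomviews", "http://viewpage:8080");
    }
}

class GatewayRouteDemo {
    public static void main(String[] args) {
        RouteTable table = RouteTable.airbnb();
        System.out.println(table.resolve("/reservations/1"));
        System.out.println(table.resolve("/check/chkAndReqReserve"));
        System.out.println(table.resolve("/unknown"));
    }
}
```

Clients only ever see the gateway address; which service answers a request is decided entirely by the path prefix.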

Correlation

In the Airbnb project, the correlation key that PolicyHandler uses to decide which record to process is passed as a field of the event class, so that related processing across services is applied to the right records.

The example below shows this in practice.

When a reservation is made, the status of the room and payment services changes accordingly at the same time. You can confirm that data such as the status value moves to the appropriate state.

Reservation registration

After reservation - room status

After reservation - reservation status

After reservation - payment status

Cancel reservation

After cancellation - room status

After cancellation - reservation status

After cancellation - payment status
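The correlation-key mechanism described in this section can be sketched as follows. This is a simplified stand-in, assuming that the payment event carries the reservation id (`rsvId`) as the key; the class shapes, the `payId` field, and the in-memory repository are hypothetical, while the status values `reqReserve` and `reserved` are taken from the examples in this document.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical event: rsvId is the correlation key copied from the reservation.
class PaymentApproved {
    final long payId;
    final long rsvId;

    PaymentApproved(long payId, long rsvId) {
        this.payId = payId;
        this.rsvId = rsvId;
    }
}

class Reservation {
    final long rsvId;
    final long roomId;
    String status;

    Reservation(long rsvId, long roomId, String status) {
        this.rsvId = rsvId;
        this.roomId = roomId;
        this.status = status;
    }
}

// Stand-in for the reservation service's PolicyHandler and repository.
class ReservationPolicyHandler {
    private final Map<Long, Reservation> repository = new HashMap<>();

    void save(Reservation r) {
        repository.put(r.rsvId, r);
    }

    Reservation find(long rsvId) {
        return repository.get(rsvId);
    }

    // Policy: whenever a payment is approved, confirm the matching reservation.
    void wheneverPaymentApproved(PaymentApproved event) {
        Reservation r = repository.get(event.rsvId); // look up by correlation key
        if (r == null) return;                       // unknown reservation: ignore
        r.status = "reserved";
    }
}

class CorrelationDemo {
    public static void main(String[] args) {
        ReservationPolicyHandler handler = new ReservationPolicyHandler();
        handler.save(new Reservation(1L, 7L, "reqReserve"));
        handler.save(new Reservation(2L, 7L, "reqReserve"));
        handler.wheneverPaymentApproved(new PaymentApproved(100L, 2L));
        System.out.println(handler.find(1L).status + ", " + handler.find(2L).status);
    }
}
```

Only the reservation whose id matches the key in the event changes state; other reservations for the same room are left untouched.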

· Synchronous call (Sync) and Fallback handling

As one of the conditions set in the analysis stage, the availability check against the room service at reservation time is handled as a synchronous, consistent transaction. The call uses FeignClient to invoke the REST service already exposed by the REST repository. The reservation -> payment call is also handled synchronously.

- Implement service proxy interfaces (stubs) with FeignClient to call the room and payment services

# PaymentService.java

package airbnb.external;

<import statements omitted>

@FeignClient(name="Payment", url="${prop.room.url}")
public interface PaymentService {

    @RequestMapping(method= RequestMethod.POST, path="/payments")
    public void approvePayment(@RequestBody Payment payment);

}

# RoomService.java

package airbnb.external;

<import statements omitted>

@FeignClient(name="Room", url="${prop.room.url}")
public interface RoomService {

    @RequestMapping(method= RequestMethod.GET, path="/check/chkAndReqReserve")
    public boolean chkAndReqReserve(@RequestParam("roomId") long roomId);

}

- Immediately after the reservation request is received (@PostPersist), check availability and request payment synchronously (Sync)

# Reservation.java (Entity)

@PostPersist
public void onPostPersist(){
    // Runs right after the row is INSERTed into RESERVATION,
    // i.e. when a reservation request (reqReserve) is received.

    // Check whether the ROOM is available (synchronous call)
    boolean result = ReservationApplication.applicationContext.getBean(airbnb.external.RoomService.class)
                        .chkAndReqReserve(this.getRoomId());
    System.out.println("######## Check Result : " + result);

    if(result) {
        // The room is available: request PAYMENT (POST method) - SYNC call
        airbnb.external.Payment payment = new airbnb.external.Payment();
        payment.setRsvId(this.getRsvId());
        payment.setRoomId(this.getRoomId());
        payment.setStatus("paid");
        ReservationApplication.applicationContext.getBean(airbnb.external.PaymentService.class)
            .approvePayment(payment);

        // Event publication --> ReservationCreated
        ReservationCreated reservationCreated = new ReservationCreated();
        BeanUtils.copyProperties(this, reservationCreated);
        reservationCreated.publishAfterCommit();
    }
}

- Confirm that the synchronous call creates temporal coupling with the callee, so that reservations cannot be accepted while the payment system is down:

# Temporarily stop the payment service (ctrl+c)

# Reservation request
http POST http://localhost:8088/reservations roomId=1 status=reqReserve   #Fail

# Restart payment service
cd payment
mvn spring-boot:run

# Reservation request
http POST http://localhost:8088/reservations roomId=1 status=reqReserve   #Success

- Also, under excessive requests, service failures can cascade like dominoes. (Circuit breaker and fallback handling are explained in the operation phase.)
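The temporal coupling demonstrated in this section can be reproduced in a few lines of plain Java. This is a sketch under stated assumptions rather than the project's code: `PaymentClient` is a hypothetical stand-in for the FeignClient proxy, and returning `false` replaces the exception and rollback that a failed call would cause inside `@PostPersist`.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the FeignClient proxy to the payment service.
interface PaymentClient {
    void approvePayment(long rsvId, long roomId);
}

class ReservationService {
    private final PaymentClient paymentClient;
    private final List<Long> accepted = new ArrayList<>();

    ReservationService(PaymentClient paymentClient) {
        this.paymentClient = paymentClient;
    }

    // The reservation is accepted only if the synchronous payment call succeeds.
    boolean reserve(long rsvId, long roomId) {
        try {
            paymentClient.approvePayment(rsvId, roomId); // caller waits for the answer
        } catch (RuntimeException e) {
            return false; // payment unavailable: the reservation is not recorded
        }
        accepted.add(rsvId);
        return true;
    }

    List<Long> accepted() {
        return accepted;
    }
}

class SyncCallDemo {
    public static void main(String[] args) {
        PaymentClient down = (rsvId, roomId) -> {
            throw new IllegalStateException("payment service is down");
        };
        PaymentClient up = (rsvId, roomId) -> { };

        System.out.println(new ReservationService(down).reserve(1L, 1L)); // false
        System.out.println(new ReservationService(up).reserve(1L, 1L));   // true
    }
}
```

This is the behavior the requirement asks for (no reservation without payment), and it is also why the availability of reservation is bounded by the availability of payment.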

· Asynchronous Invocation / Temporal Decoupling / Failure Isolation / Eventual Consistency Test

After payment is made, updating the accommodation system, updating the reservation system, and communicating with the system that sends reservation and cancellation messages are all handled asynchronously.

- To do this, when the payment is approved, an event indicating the approval is published to Kafka. (Publish)

# Payment.java

package airbnb;

import javax.persistence.*;
import org.springframework.beans.BeanUtils;

@Entity
@Table(name="Payment_table")
public class Payment {

    ....

    @PostPersist
    public void onPostPersist(){
        // If payment is approved
        // Event publication -> PaymentApproved
        PaymentApproved paymentApproved = new PaymentApproved();
        BeanUtils.copyProperties(this, paymentApproved);
        paymentApproved.publishAfterCommit();
    }

    ....
}

- The reservation system implements a PolicyHandler to receive the payment approval event and apply its own policy:

# Reservation.java

package airbnb;

@PostUpdate
public void onPostUpdate(){

    ....

    if(this.getStatus().equals("reserved")) {
        // When the reservation is confirmed
        // Event publication --> ReservationConfirmed
        ReservationConfirmed reservationConfirmed = new ReservationConfirmed();
        BeanUtils.copyProperties(this, reservationConfirmed);
        reservationConfirmed.publishAfterCommit();
    }

    ....
}

In addition, the message service is completely separated from reservation/payment and is driven only by the events it receives, so reservations can still be accepted while the message service is temporarily down for maintenance.
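Why a stopped consumer does not block the publisher can be shown with a small in-memory model of a topic. This is a sketch of the idea only, assuming an append-only log with one read offset per consumer group; `Topic`, `poll`, and the event strings (including `ReservationCancelled`) are hypothetical and do not use the Kafka client API.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical in-memory topic: an append-only log plus one offset per consumer group.
class Topic {
    private final List<String> log = new ArrayList<>();
    private final Map<String, Integer> offsets = new HashMap<>();

    // Publishing never depends on whether any consumer is currently running.
    void publish(String event) {
        log.add(event);
    }

    // Returns the events this group has not seen yet and advances its offset.
    List<String> poll(String consumerGroup) {
        int from = offsets.getOrDefault(consumerGroup, 0);
        List<String> batch = new ArrayList<>(log.subList(from, log.size()));
        offsets.put(consumerGroup, log.size());
        return batch;
    }
}

class AsyncDecouplingDemo {
    public static void main(String[] args) {
        Topic topic = new Topic();

        // The message service is "down": events are still published successfully.
        topic.publish("ReservationConfirmed:1");
        topic.publish("ReservationCancelled:1");

        // The message service comes back and catches up on what it missed.
        System.out.println(topic.poll("message"));
        // A second poll has nothing new to deliver.
        System.out.println(topic.poll("message"));
    }
}
```

Because each group keeps its own offset, the viewpage service can read the same events independently of the message service.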

# Stop the message service (ctrl+c)

# Reservation request
http POST http://localhost:8088/reservations roomId=1 status=reqReserve   #Success

# Check reservation status
http GET localhost:8088/reservations    #The reservation status is normal regardless of the message service

Operation

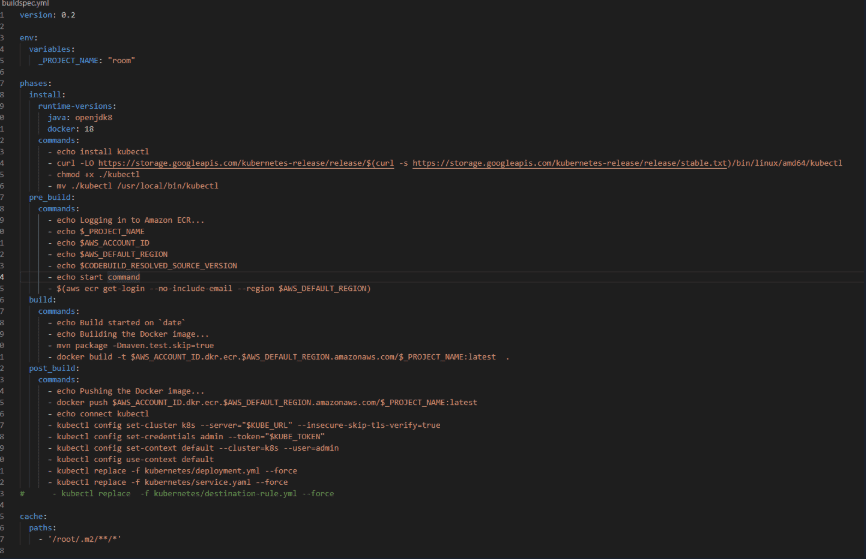

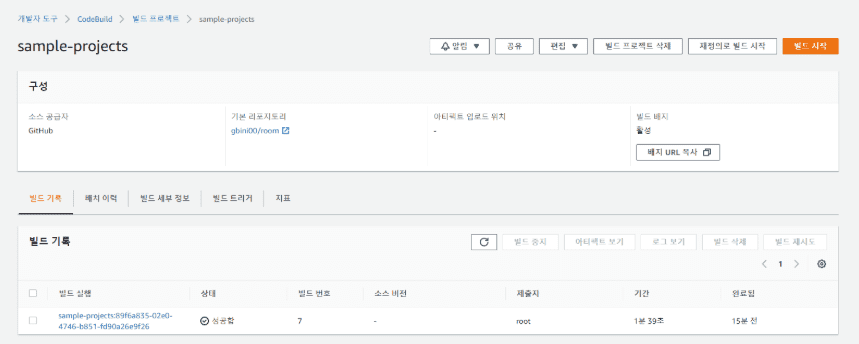

· CI/CD settings

Each implementation was configured in its own source repository, and CI/CD used AWS CodeBuild with buildspec.yml.

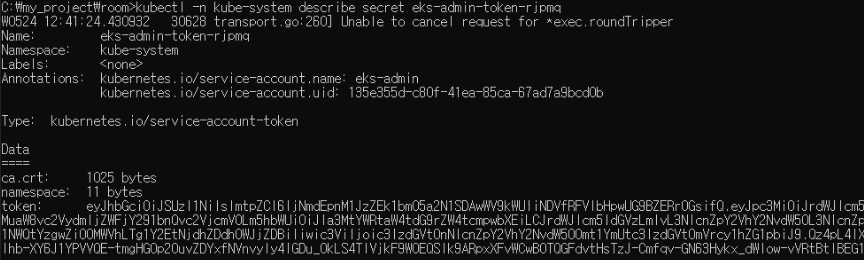

- Create a CodeBuild project and set the AWS_ACCOUNT_ID, KUBE_URL, and KUBE_TOKEN environment variables.

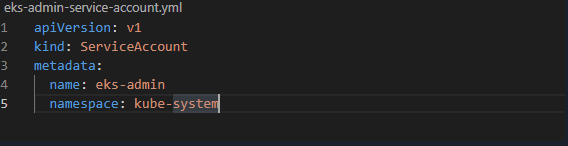

SA creation

kubectl apply -f eks-admin-service-account.yml

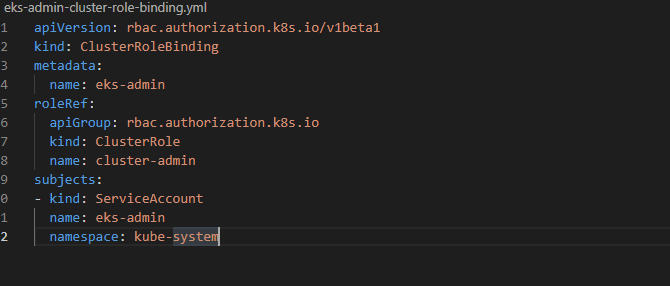

Create Role

kubectl apply -f eks-admin-cluster-role-binding.yml

Token confirmation

kubectl -n kube-system get secret

kubectl -n kube-system describe secret eks-admin-token-rjpmq

buildspec.yml file

Configured to use the yml file of the room microservice

- Run CodeBuild

CodeBuild project and build history

- CodeBuild build history (Message service details)

- CodeBuild build history (full history)

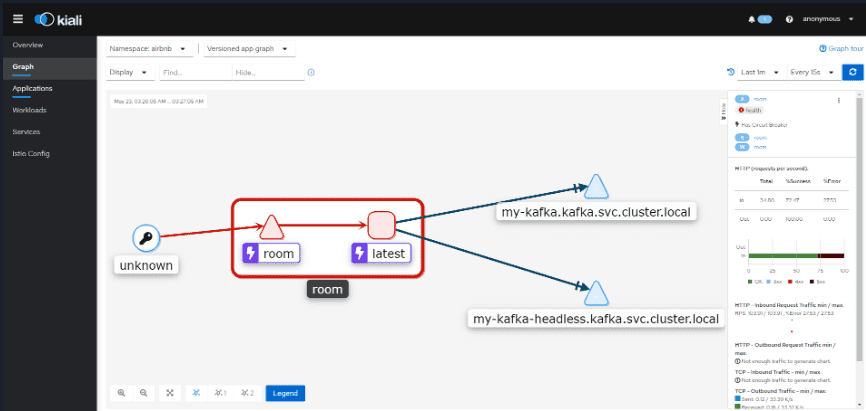

· Synchronous Call / Circuit Breaking / Fault Isolation

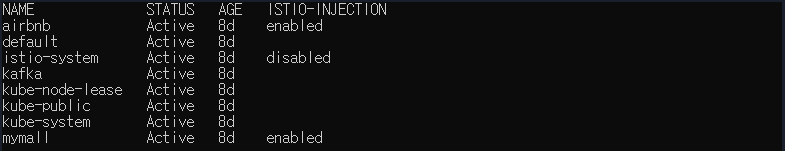

- Circuit breaking framework of choice: implemented with Istio

The scenario connects reservation --> room with a RESTful request/response call and, when reservation requests become excessive, isolates the failure through the circuit breaker.
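The load-shedding behavior that this scenario relies on can be illustrated with a semaphore in plain Java. This is an analogy, not how Istio or Envoy implement connection pools: `AdmissionGate` is a hypothetical class that admits a fixed number of in-flight requests and answers 503 immediately for the rest, instead of letting them queue up.

```java
import java.util.concurrent.Semaphore;
import java.util.function.Supplier;

// Hypothetical gate: admits at most maxInFlight concurrent calls, rejects the rest.
class AdmissionGate {
    private final Semaphore permits;

    AdmissionGate(int maxInFlight) {
        this.permits = new Semaphore(maxInFlight);
    }

    // Returns the upstream status code, or 503 if the gate is already full.
    int call(Supplier<Integer> upstream) {
        if (!permits.tryAcquire()) {
            return 503; // fail fast instead of piling up behind a slow service
        }
        try {
            return upstream.get();
        } finally {
            permits.release();
        }
    }
}

class AdmissionGateDemo {
    public static void main(String[] args) {
        AdmissionGate gate = new AdmissionGate(1);
        int[] overlapping = new int[1];

        // While the first request is in flight, a second one arrives and is rejected.
        int first = gate.call(() -> {
            overlapping[0] = gate.call(() -> 201);
            return 201;
        });

        System.out.println("first=" + first + ", overlapping=" + overlapping[0]);
        System.out.println("afterwards=" + gate.call(() -> 201));
    }
}
```

Rejecting early keeps the caller responsive and stops one overloaded service from dragging down the services that call it.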

- Create a DestinationRule so that the circuit breaker can trip. Set a minimal connection pool.

# destination-rule.yml

apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: dr-room
  namespace: airbnb
spec:
  host: room
  trafficPolicy:
    connectionPool:
      http:
        http1MaxPendingRequests: 1
        maxRequestsPerConnection: 1
#    outlierDetection:
#      interval: 1s
#      consecutiveErrors: 1
#      baseEjectionTime: 10s
#      maxEjectionPercent: 100

- Enable istio-injection and check the containers of the room pod

kubectl get ns -L istio-injection

kubectl label namespace airbnb istio-injection=enabled

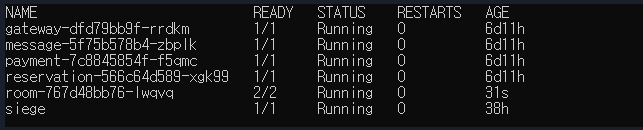

- Check circuit breaker operation with the siege load-testing tool:

Run siege

kubectl run siege --image=apexacme/siege-nginx -n airbnb
kubectl exec -it siege -c siege -n airbnb -- /bin/bash

- With 1 concurrent user, all requests succeed.

siege -c1 -t10S -v --content-type "application/json" 'http://room:8080/rooms POST {"desc": "Beautiful House3"}'

** SIEGE 4.0.4

** Preparing 1 concurrent users for battle.

The server is now under siege...

HTTP/1.1 201 0.49 secs: 254 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.05 secs: 254 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.02 secs: 254 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.03 secs: 254 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.02 secs: 254 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.02 secs: 254 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.03 secs: 254 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.03 secs: 254 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.03 secs: 254 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.03 secs: 256 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.03 secs: 256 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.02 secs: 256 bytes ==> POST http://room:8080/rooms

- With 2 concurrent users, 168 requests failed with 503 errors

siege -c2 -t10S -v --content-type "application/json" 'http://room:8080/rooms POST {"desc": "Beautiful House3"}'

** SIEGE 4.0.4

** Preparing 2 concurrent users for battle.

The server is now under siege...

HTTP/1.1 201 0.02 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.02 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 503 0.10 secs: 81 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.02 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.04 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.05 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.22 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.08 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.07 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 503 0.01 secs: 81 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.03 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.02 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.02 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 503 0.01 secs: 81 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.02 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.02 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.02 secs: 258 bytes ==> POST http://room:8080/rooms

HTTP/1.1 503 0.00 secs: 81 bytes ==> POST http://room:8080/rooms

Lifting the server siege...

Transactions: 1904 hits

Availability: 91.89 %

Elapsed time: 9.89 secs

Data transferred: 0.48 MB

Response time: 0.01 secs

Transaction rate: 192.52 trans/sec

Throughput: 0.05 MB/sec

Concurrency: 1.98

Successful transactions: 1904

Failed transactions: 168

Longest transaction: 0.03

Shortest transaction: 0.00

- Check the circuit breaker on the Kiali screen.

- Run the load again with 1 concurrent user, which fits within the minimal connection pool:

** SIEGE 4.0.4

** Preparing 1 concurrent users for battle.

The server is now under siege...

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.03 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.00 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.02 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.00 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.00 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

:

:

Lifting the server siege...

Transactions: 1139 hits

Availability: 100.00 %

Elapsed time: 9.19 secs

Data transferred: 0.28 MB

Response time: 0.01 secs

Transaction rate: 123.94 trans/sec

Throughput: 0.03 MB/sec

Concurrency: 0.98

Successful transactions: 1139

Failed transactions: 0

Longest transaction: 0.04

Shortest transaction: 0.00

- The system stays up throughout, and the circuit breaker (CB) opens and closes as intended to protect resources. As a follow-up, capacity still needs to be expanded through virtualhost configuration and dynamic scale-out (automatic addition of replicas, HPA).

Auto Scale Out

The CB kept the system stable, but it did not accept 100% of user requests. Automated scale-out is applied to complement it.

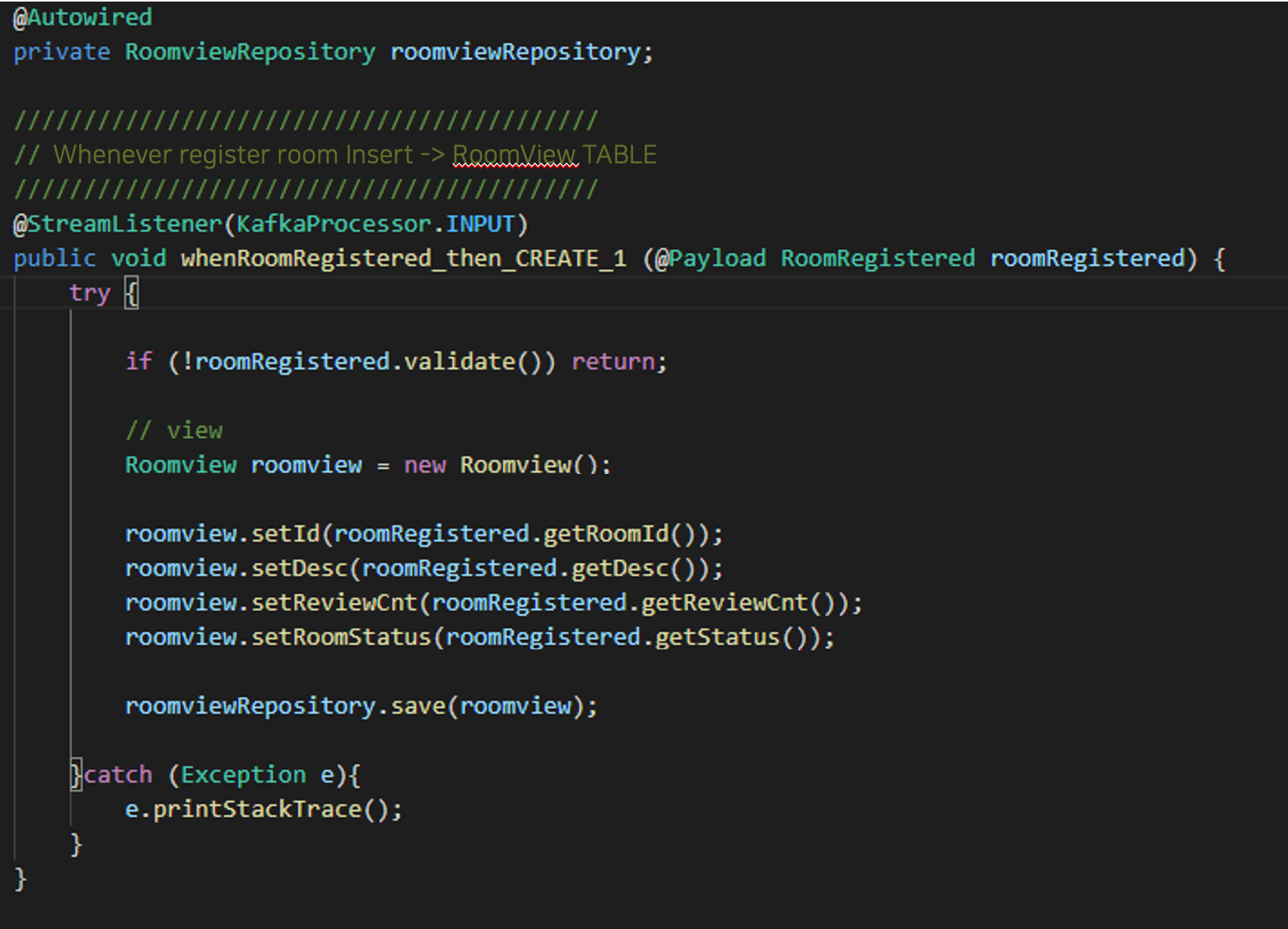

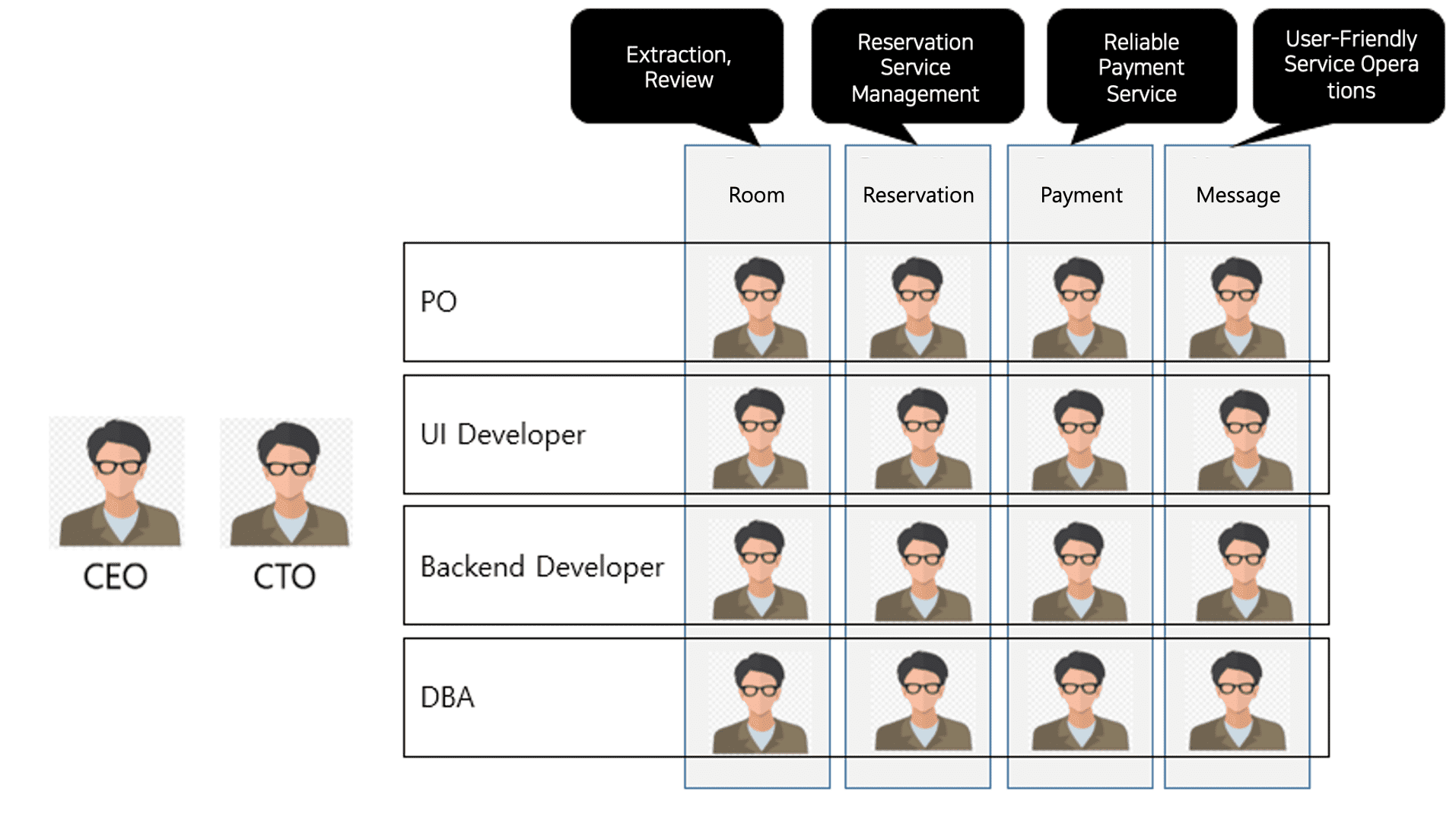

- Add resources configuration to room deployment.yml file


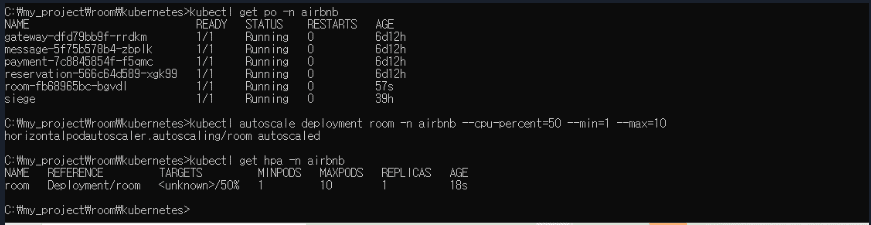
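HPA calculates CPU utilization as a percentage of each container's CPU request, so the room container needs a `resources` section before autoscaling can work. A minimal sketch of such a deployment.yml follows. The image name, the `app: room` label, and the CPU values are assumptions for illustration, not values taken from the project.

```yaml
# Sketch only: image, labels, and CPU values are hypothetical.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: room
  namespace: airbnb
spec:
  replicas: 1
  selector:
    matchLabels:
      app: room
  template:
    metadata:
      labels:
        app: room
    spec:
      containers:
        - name: room
          image: example.com/airbnb/room:v1  # hypothetical image name
          ports:
            - containerPort: 8080
          resources:
            requests:
              cpu: 200m   # baseline that HPA utilization is measured against
            limits:
              cpu: 500m   # hard ceiling per container
```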

- Configure HPA to scale the room service replicas dynamically. With this setting, replicas are added, up to a maximum of 10, when average CPU utilization exceeds 50%:

kubectl autoscale deployment room -n airbnb --cpu-percent=50 --min=1 --max=10

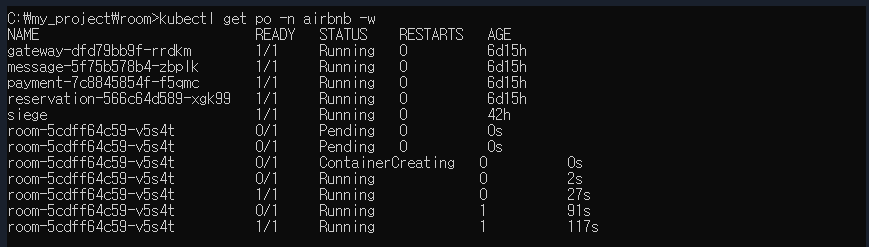
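The kubectl autoscale command above can also be written as a manifest, which is easier to keep under version control. The sketch below assumes the cluster serves the `autoscaling/v2` API (Kubernetes 1.23 or later) and that a metrics source such as metrics-server is installed. Both are assumptions about the environment.

```yaml
# Sketch only: declarative equivalent of
#   kubectl autoscale deployment room -n airbnb --cpu-percent=50 --min=1 --max=10
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: room
  namespace: airbnb
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: room
  minReplicas: 1
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 50  # percent of the CPU request, averaged over pods
```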

- Generate load with 100 concurrent users for 1 minute.

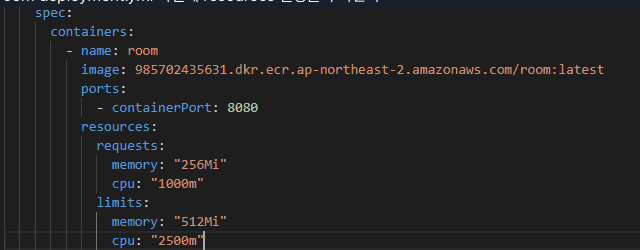

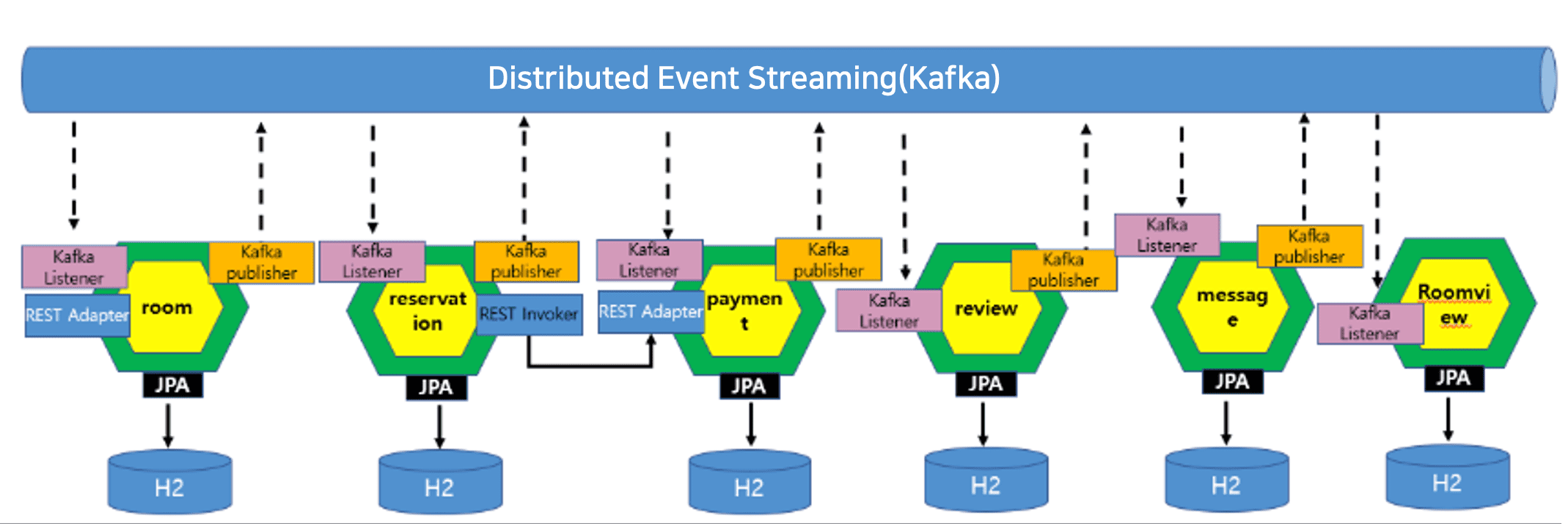

siege -c100 -t60S -v --content-type "application/json" 'http://room:8080/rooms POST {"desc": "Beautiful House3"}'

- Monitor how the autoscaling progresses:

kubectl get deploy room -w -n airbnb

- After some time (about 30 seconds), you can see the scale-out occur:


- The siege log shows that the overall success rate has increased:

Lifting the server siege...

Transactions: 15615 hits

Availability: 100.00 %

Elapsed time: 59.44 secs

Data transferred: 3.90 MB

Response time: 0.32 secs

Transaction rate: 262.70 trans/sec

Throughput: 0.07 MB/sec

Concurrency: 85.04

Successful transactions: 15675

Failed transactions: 0

Longest transaction: 2.55

Shortest transaction: 0.01

Uninterrupted redistribution (zero-downtime redeployment)

- First, remove the autoscaler and CB settings so that they do not interfere with checking whether availability stays at 100% during redeployment.

kubectl delete destinationrules dr-room -n airbnb

kubectl label namespace airbnb istio-injection-

kubectl delete hpa room -n airbnb

- Start monitoring the workload with siege right before the deployment.

siege -c100 -t60S -r10 -v --content-type "application/json" 'http://room:8080/rooms POST {"desc": "Beautiful House3"}'

** SIEGE 4.0.4

** Preparing 1 concurrent users for battle.

The server is now under siege...

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.03 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.00 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.02 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

HTTP/1.1 201 0.01 secs: 260 bytes ==> POST http://room:8080/rooms

- Start deploying the new version:

kubectl set image ...

- Go back to the siege screen and check whether Availability has dropped below 100%:

siege -c100 -t60S -r10 -v --content-type "application/json" 'http://room:8080/rooms POST {"desc": "Beautiful House3"}'

Transactions: 7732 hits

Availability: 87.32 %

Elapsed time: 17.12 secs

Data transferred: 1.93 MB

Response time: 0.18 secs

Transaction rate: 451.64 trans/sec

Throughput: 0.11 MB/sec

Concurrency: 81.21

Successful transactions: 7732

Failed transactions: 1123

Longest transaction: 0.94

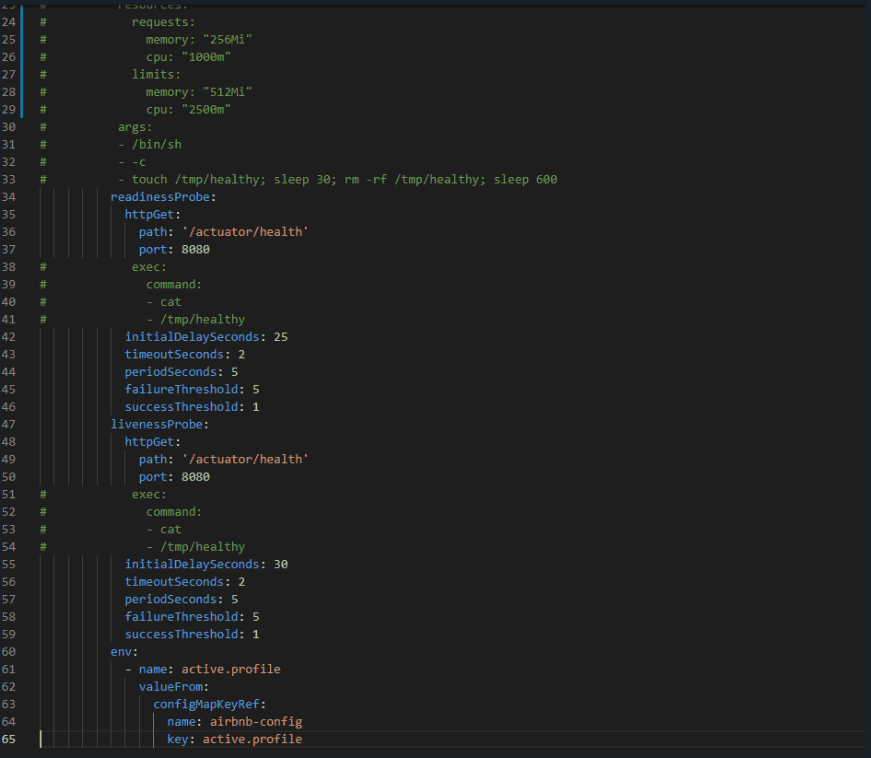

Shortest transaction: 0.00- During the distribution period, it was confirmed that the availability fell from 100% to 87%. The reason is that Kubernetes recognized the newly uploaded service as a READY state and proceeded to introduce the service. To prevent this, readiness probe is set.
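A readiness probe keeps a new pod out of the Service endpoints until the probe succeeds, so traffic keeps going to the old pods while the new ones start. The fragment below is a sketch of the container section of the room deployment.yml. The image name, the `/actuator/health` path (which assumes Spring Boot Actuator is enabled), the container port, and the timing values are all assumptions.

```yaml
# Sketch only: fragment of spec.template.spec in deployment.yml.
# Image, probe path, port, and timings are hypothetical.
containers:
  - name: room
    image: example.com/airbnb/room:v2  # hypothetical image name
    ports:
      - containerPort: 8080
    readinessProbe:
      httpGet:
        path: /actuator/health   # any endpoint that returns 2xx once the app is up
        port: 8080
      initialDelaySeconds: 10    # wait before the first check
      periodSeconds: 5           # check every 5 seconds
      timeoutSeconds: 2          # a slower response counts as a failure
      failureThreshold: 10       # consecutive failures before marking not ready
```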

# Configure readiness probe in deployment.yml:

kubectl apply -f kubernetes/deployment.yml

- Check Availability after redeploying with the same scenario:

Lifting the server siege...

Transactions: 27657 hits

Availability: 100.00 %

Elapsed time: 59.41 secs

Data transferred: 6.91 MB

Response time: 0.21 secs

Transaction rate: 465.53 trans/sec

Throughput: 0.12 MB/sec

Concurrency: 99.60

Successful transactions: 27657

Failed transactions: 0

Longest transaction: 1.20

Shortest transaction: 0.00

Availability did not change during the deployment, which confirms that the zero-downtime redeployment succeeded.

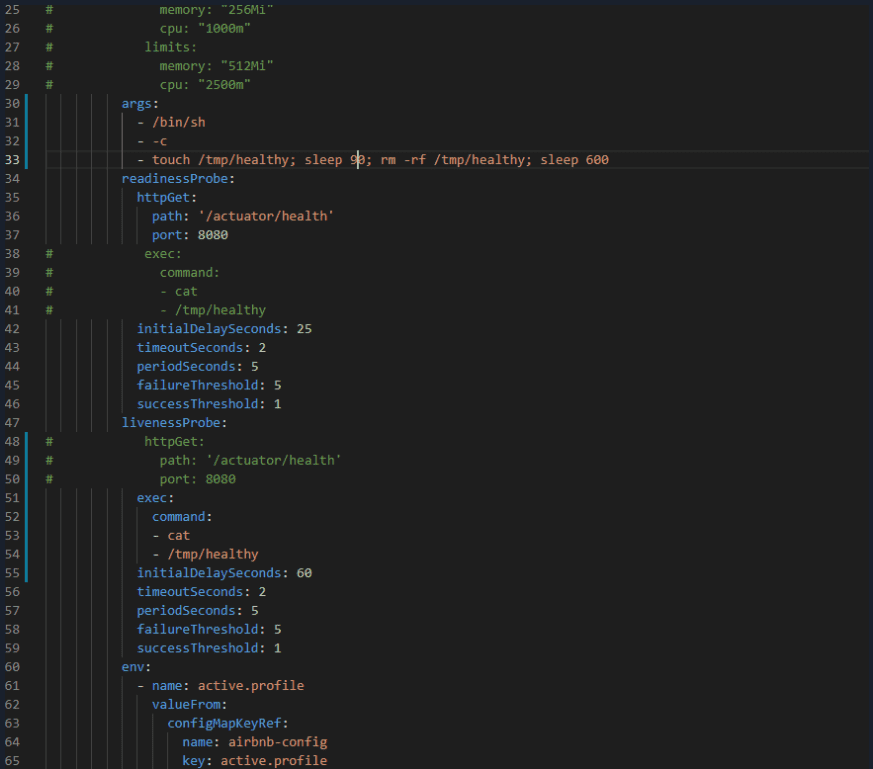

Self-healing (Liveness Probe)

- Edit the room deployment.yml file:

After the container starts, create a /tmp/healthy file.

Delete the file after 90 seconds.

Have the livenessProbe check the container with 'cat /tmp/healthy'.
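The three steps above could be expressed roughly as follows. This is a sketch of the container section only. The image name and the probe timings are assumptions, and overriding `args` like this replaces the image's normal start command, so it is suited to a demonstration rather than to a running service.

```yaml
# Sketch only: fragment of spec.template.spec in deployment.yml.
# Image name and probe timings are hypothetical.
containers:
  - name: room
    image: example.com/airbnb/room:v2  # hypothetical image name
    args:
      - /bin/sh
      - -c
      # create the marker file, remove it after 90 seconds, then stay alive
      - touch /tmp/healthy; sleep 90; rm -rf /tmp/healthy; sleep 600
    livenessProbe:
      exec:
        command:
          - cat
          - /tmp/healthy         # exit code 0 while the file exists
      initialDelaySeconds: 5     # wait before the first check
      periodSeconds: 5           # check every 5 seconds
```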

- Check the result by running kubectl describe pod room -n airbnb

For the first 90 seconds after the container starts, the probe succeeds. After that, the /tmp/healthy file is deleted and the livenessProbe reports a failure.

While the pod is still in a normal state, you can enter the pod and create the /tmp/healthy file again to keep it healthy.

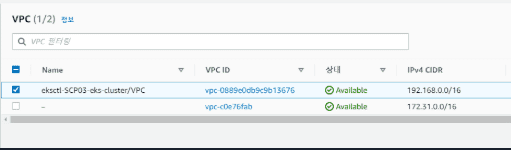

Config Map/ Persistence Volume

- Persistence Volume

1: Create EFS

When creating the EFS file system, you must select the VPC of your cluster.

- Create the EFS service account and bind its roles

kubectl apply -f efs-sa.yml

apiVersion: v1
kind: ServiceAccount
metadata:
  name: efs-provisioner
  namespace: airbnb

kubectl get ServiceAccount efs-provisioner -n airbnb

NAME              SECRETS   AGE
efs-provisioner   1         9m1s

kubectl apply -f efs-rbac.yaml

You must edit the namespace

apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: efs-provisioner-runner
  namespace: airbnb
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-efs-provisioner
  namespace: airbnb
subjects:
  - kind: ServiceAccount
    name: efs-provisioner
    # replace with namespace where provisioner is deployed
    namespace: airbnb
roleRef:
  kind: ClusterRole
  name: efs-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-efs-provisioner
  namespace: airbnb
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-efs-provisioner
  namespace: airbnb
subjects:
  - kind: ServiceAccount
    name: efs-provisioner
    # replace with namespace where provisioner is deployed
    namespace: airbnb
roleRef:
  kind: Role
  name: leader-locking-efs-provisioner
  apiGroup: rbac.authorization.k8s.io

- Deploy EFS Provisioner

kubectl apply -f efs-provisioner-deploy.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: efs-provisioner
  namespace: airbnb
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: efs-provisioner
  template:
    metadata:
      labels:
        app: efs-provisioner
    spec:
      serviceAccount: efs-provisioner
      containers:
        - name: efs-provisioner
          image: quay.io/external_storage/efs-provisioner:latest
          env:
            - name: FILE_SYSTEM_ID
              value: fs-562f9c36
            - name: AWS_REGION
              value: ap-northeast-2
            - name: PROVISIONER_NAME
              value: my-aws.com/aws-efs
          volumeMounts:
            - name: pv-volume
              mountPath: /persistentvolumes
      volumes:
        - name: pv-volume
          nfs:
            server: fs-562f9c36.efs.ap-northeast-2.amazonaws.com
            path: /

kubectl get Deployment efs-provisioner -n airbnb

NAME              READY   UP-TO-DATE   AVAILABLE   AGE
efs-provisioner   1/1     1            1           11m

- Register the installed provisioner as a StorageClass

kubectl apply -f efs-storageclass.yml

kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: aws-efs
  namespace: airbnb
provisioner: my-aws.com/aws-efs

kubectl get sc aws-efs -n airbnb

NAME      PROVISIONER          RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
aws-efs   my-aws.com/aws-efs   Delete          Immediate           false                  4s

- Create a PersistentVolumeClaim (PVC)

kubectl apply -f volume-pvc.yml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: aws-efs
  namespace: airbnb
  labels:
    app: test-pvc
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 6Ki
  storageClassName: aws-efs

kubectl get pvc aws-efs -n airbnb

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

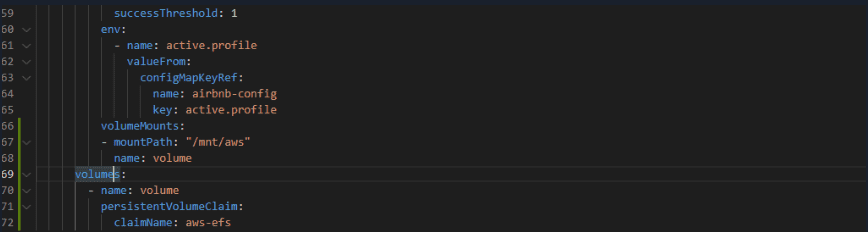

aws-efs Bound pvc-43f6fe12-b9f3-400c-ba20-b357c1639f00 6Ki RWX aws-efs 4m44s- room pod application

kubectl apply -f deployment.yml

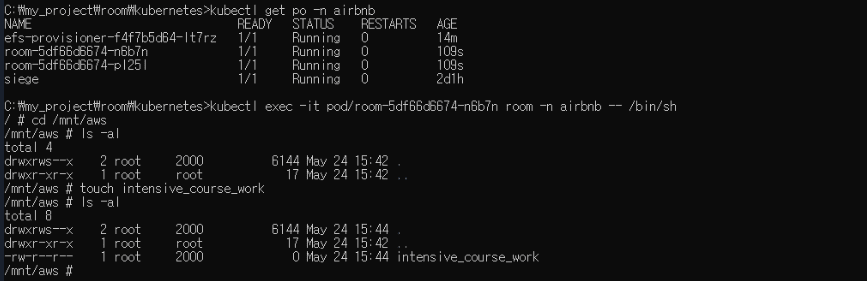

- Create a file in the mounted path in pod A and check the file in pod B

NAME                              READY   STATUS    RESTARTS   AGE
efs-provisioner-f4f7b5d64-lt7rz   1/1     Running   0          14m
room-5df66d6674-n6b7n             1/1     Running   0          109s
room-5df66d6674-pl25l             1/1     Running   0          109s
siege                             1/1     Running   0          2d1h

kubectl exec -it pod/room-5df66d6674-n6b7n room -n airbnb -- /bin/sh

/ # cd /mnt/aws

/mnt/aws # touch intensive_course_work

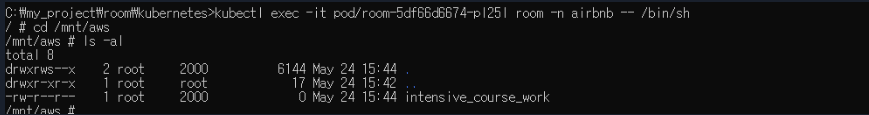

kubectl exec -it pod/room-5df66d6674-pl25l room -n airbnb -- /bin/sh

/ # cd /mnt/aws

/mnt/aws # ls -al

total 8

drwxrws--x 2 root 2000 6144 May 24 15:44 .

drwxr-xr-x 1 root root 17 May 24 15:42 ..

-rw-r--r-- 1 root 2000 0 May 24 15:44 intensive_course_work

- Config Map

1: Create cofingmap.yml file

kubectl apply -f cofingmap.yml

apiVersion: v1
kind: ConfigMap
metadata:
  name: airbnb-config
  namespace: airbnb
data:
  # single key-value pairs
  max_reservation_per_person: "10"
  ui_properties_file_name: "user-interface.properties"

- Apply to deployment.yml

kubectl apply -f deployment.yml

.......
          env:
            # single key-value in ConfigMap
            - name: MAX_RESERVATION_PER_PERSION
              valueFrom:
                configMapKeyRef:
                  name: airbnb-config
                  key: max_reservation_per_person
            - name: UI_PROPERTIES_FILE_NAME
              valueFrom:
                configMapKeyRef:
                  name: airbnb-config
                  key: ui_properties_file_name
          volumeMounts:
            - mountPath: "/mnt/aws"
              name: volume
      volumes:
        - name: volume
          persistentVolumeClaim:
            claimName: aws-efs
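The fragment above maps each ConfigMap key to an environment variable one at a time with configMapKeyRef. When every key in airbnb-config should be exposed, `envFrom` is a shorter alternative. The sketch below shows only the container section, and the image name is an assumption. Note that with envFrom the variable names are the ConfigMap keys themselves (here the lowercase `max_reservation_per_person` and `ui_properties_file_name`), so the application would have to read those names.

```yaml
# Sketch only: fragment of spec.template.spec in deployment.yml.
# Image name is hypothetical.
containers:
  - name: room
    image: example.com/airbnb/room:v2  # hypothetical image name
    envFrom:
      - configMapRef:
          name: airbnb-config          # every key becomes an environment variable
```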